Re: CephFS warning: clients laggy due to laggy OSDs — CEPH. Laggy OSDs can prevent CephFS clients from flushing dirty data during cap revokes by the MDS, so those clients show up as laggy and get evicted.

Troubleshooting — Ceph Documentation

Ceph.io — Ceph: A Journey to 1 TiB/s

Troubleshooting — Ceph Documentation. If an operation is hung inside the MDS, it will eventually show up in ceph health, identifying “slow requests are blocked”. It may also identify clients as “…
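As a rough illustration of checking `ceph health` for the MDS conditions mentioned above, the sketch below filters a JSON health report for MDS-related checks. It assumes the report has the shape produced by `ceph health detail --format json` (a top-level `checks` map keyed by check code, each with a `summary.message`), and that `MDS_SLOW_REQUEST`, `MDS_CLIENT_LATE_RELEASE` and `MDS_CLIENTS_LAGGY` are the codes of interest. The sample data is made up for the example, and the exact fields may differ between Ceph releases.

```python
import json

# Check codes assumed to be the MDS-related ones of interest here.
MDS_CHECKS = {"MDS_SLOW_REQUEST", "MDS_CLIENT_LATE_RELEASE", "MDS_CLIENTS_LAGGY"}


def mds_warnings(health_json: str) -> dict[str, str]:
    """Return {check_code: summary message} for MDS-related health checks."""
    report = json.loads(health_json)
    checks = report.get("checks", {})
    return {
        code: body.get("summary", {}).get("message", "")
        for code, body in checks.items()
        if code in MDS_CHECKS
    }


# Hypothetical sample, shaped like `ceph health detail --format json` output.
SAMPLE = json.dumps({
    "status": "HEALTH_WARN",
    "checks": {
        "MDS_SLOW_REQUEST": {
            "severity": "HEALTH_WARN",
            "summary": {"message": "1 MDSs report slow requests", "count": 1},
        },
        "OSD_NEARFULL": {
            "severity": "HEALTH_WARN",
            "summary": {"message": "1 nearfull osd(s)", "count": 1},
        },
    },
})

if __name__ == "__main__":
    for code, message in mds_warnings(SAMPLE).items():
        print(f"{code}: {message}")
```

On a live cluster the input string would come from running the command itself, for example through `subprocess.run`, rather than from the embedded sample.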

Ceph.io — Ceph: A Journey to 1 TiB/s. Ceph can be very slow when not compiled with the right cmake flags and compiler optimizations. Historically this customer used the upstream Ceph …

[ceph-users] CephFS warning: clients laggy due to laggy OSDs

[ceph-users] CephFS warning: clients laggy due to laggy OSDs. Since the upgrade to Ceph 16.2.14, I keep seeing the following warning: 10 client(s) laggy due to laggy OSDs.

Release Notes | Red Hat Product Documentation

File System Guide | Red Hat Product Documentation

Release Notes | Red Hat Product Documentation. With this fix, the X client(s) laggy due to laggy OSDs message is only sent out if some clients and an OSD is laggy. Bugzilla:2247187.
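Read literally, the release note describes a simple condition: the warning is raised only when there are laggy clients and at least one laggy OSD. The sketch below expresses that condition in isolation. The function names and message formatting are hypothetical and are not taken from the MDS source.

```python
from typing import Optional


def should_report_laggy_clients(laggy_clients: int, laggy_osds: int) -> bool:
    """Warn only when both laggy clients and laggy OSDs are present."""
    return laggy_clients > 0 and laggy_osds > 0


def laggy_warning(laggy_clients: int, laggy_osds: int) -> Optional[str]:
    """Return the warning text, or None when the condition is not met."""
    if not should_report_laggy_clients(laggy_clients, laggy_osds):
        return None
    return f"{laggy_clients} client(s) laggy due to laggy OSDs"


if __name__ == "__main__":
    print(laggy_warning(10, 1))
    print(laggy_warning(10, 0))
```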

Diagnosing slow ceph performance | Proxmox Support Forum

Ceph: A Journey to 1 TiB/s | Clyso GmbH

Diagnosing slow ceph performance | Proxmox Support Forum. [client] keyring = /etc/pve/priv/$cluster.$name.keyring [mds] keyring = /var/lib/ceph/mds/ceph-$id/keyring [mds.phy-hv-sl-0049] host = 0049 …

Ceph/ODF: CephFS Degradation, Health Error Reporting “client(s

Ceph/ODF: CephFS Degradation, Health Error Reporting “client(s. health: HEALTH_WARN 2 client(s) laggy due to laggy OSDs 2 clients failing to respond to capability release 1 MDSs report slow requests
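The health line quoted above packs three separate warnings into one string. As a minimal sketch, assuming the message wording stays exactly as quoted, the counts can be pulled out with regular expressions. The dictionary keys are hypothetical labels chosen for this example.

```python
import re

# Patterns mirror the message wording in the quoted health output.
PATTERNS = {
    "laggy_clients": re.compile(r"(\d+) client\(s\) laggy due to laggy OSDs"),
    "late_cap_release": re.compile(
        r"(\d+) clients failing to respond to capability release"
    ),
    "mds_slow_requests": re.compile(r"(\d+) MDSs report slow requests"),
}


def parse_health_summary(text: str) -> dict[str, int]:
    """Extract the count for each known warning found in a health summary."""
    counts = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(text)
        if match:
            counts[name] = int(match.group(1))
    return counts


LINE = (
    "health: HEALTH_WARN 2 client(s) laggy due to laggy OSDs "
    "2 clients failing to respond to capability release "
    "1 MDSs report slow requests"
)

if __name__ == "__main__":
    print(parse_health_summary(LINE))
```

Matching on message text is brittle across releases. Where JSON output is available, keying on the health check codes is likely the more robust choice.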

Ceph: sudden slow ops, freezes, and slow-downs | Proxmox

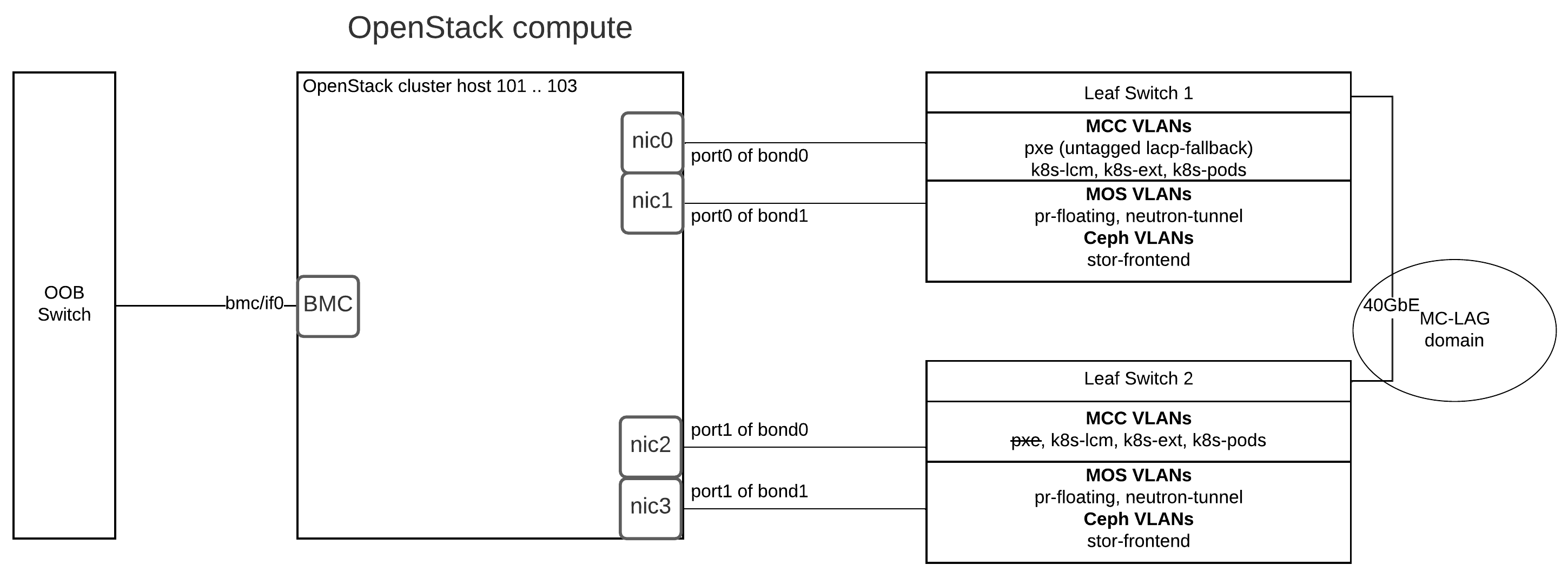

Physical networks layout - Mirantis OpenStack for Kubernetes

Ceph: sudden slow ops, freezes, and slow-downs | Proxmox. Yes CephFS has the advantage that you can mount it multiple times directly (via Ceph client in a VM) or “natively” via librbd or krbd in … Physical networks layout - Mirantis OpenStack for Kubernetes. VLAN2 (client lan), VLAN42 (corosync), VLAN40 (ceph public) and VLAN1 (ceph cluster). Target after migration is to have vm1/2/3/4 using …